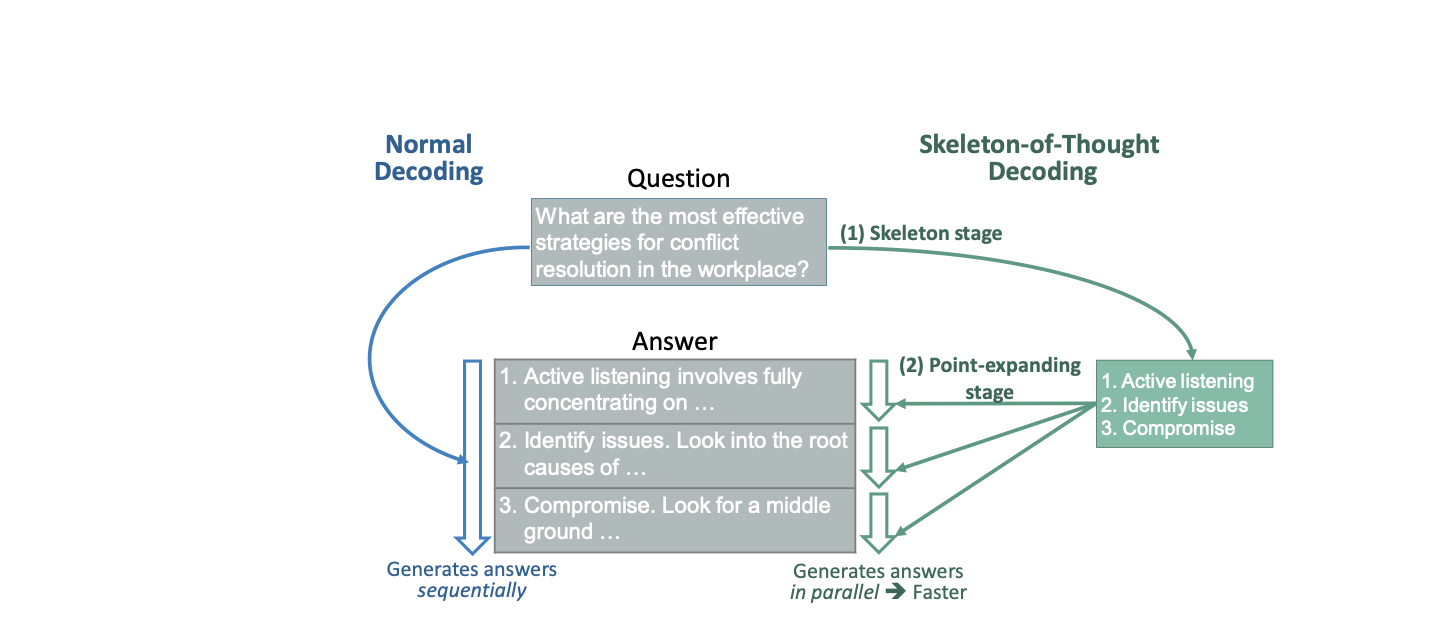

Skeleton of Thought (SoT) is a new prompting method. What sets it apart from other methods is its goal: it is built not just to get better outputs, but to make the LLM generate its answers faster and more efficiently.

How it works

The Skeleton of Thought framework aims to reduce latency (the time it takes to produce a complete answer) by enabling parallel generation.

At the heart of SoT is the idea of segmenting output generation. Instead of generating a response sequentially, one token after another from start to finish, SoT divides the content into distinct segments.

These segments are generated simultaneously, so multiple parts of an answer are written at once. It's like writing several sentences of a paragraph in parallel, rather than one after another.
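To see where the speed-up comes from, here is a back-of-the-envelope latency model. It rests on two simplifying assumptions of ours, not measured results: decoding time grows linearly with the number of generated tokens, and the parallel point expansions do not slow each other down. Under those assumptions, a normal answer costs time for all of its tokens, while SoT costs the skeleton plus only the longest single point. The function names and numbers below are illustrative.

```python
def sequential_latency(point_tokens: list[int], seconds_per_token: float) -> float:
    """Time to decode every point one after another, as a normal answer would."""
    return sum(point_tokens) * seconds_per_token


def sot_latency(skeleton_tokens: int, point_tokens: list[int], seconds_per_token: float) -> float:
    """Time for SoT: decode the skeleton, then expand all points at once.

    Idealized: the parallel stage finishes when its longest point finishes,
    and running the expansions concurrently is assumed to cost nothing extra.
    """
    return (skeleton_tokens + max(point_tokens)) * seconds_per_token


# Hypothetical numbers: six points of roughly 40 tokens, a 30-token skeleton, 20 ms per token.
points = [40, 35, 45, 40, 38, 42]
print(round(sequential_latency(points, 0.02), 2))  # 4.8
print(round(sot_latency(30, points, 0.02), 2))     # 1.5
```

Real gains are smaller than this idealized model suggests, because each point-expanding request has to re-read the question and skeleton, and concurrent requests or batched decoding are not free.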

Prompts

SoT uses two prompts to guide the LLM to generate an output efficiently.

1. Skeleton prompt

The process begins with an initial prompt that instructs the model to produce a structured skeleton of the intended answer, similar to a set of bullet points or an outline.

💬 Prompt: You’re an organizer responsible for only giving the skeleton (not the full content) for answering the question. Provide the skeleton in a list of points (numbered 1., 2., 3., etc.) to answer the question. Instead of writing a full sentence, each skeleton point should be very short with only 3∼5 words. Generally, the skeleton should have 3∼10 points.

Question: What are the typical types of Chinese dishes?

Skeleton:

1. Dumplings.
2. Noodles.
3. Dim Sum.
4. Hot Pot.
5. Wonton.
6. Ma Po Tofu.
7. Char Siu.
8. Fried Rice.

Question: What are some practical tips for individuals to reduce their carbon emissions?

Skeleton:

1. Energy conservation.
2. Efficient transportation.
3. Home energy efficiency.
4. Reduce water consumption.
5. Sustainable diet.
6. Sustainable travel.

Now, please provide the skeleton for the following question.

{{question}}

Skeleton:
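Filling in the skeleton prompt and reading back its numbered points are both plain string operations. Below is a minimal Python sketch. The names `SKELETON_TEMPLATE`, `build_skeleton_prompt`, and `parse_skeleton` are our own, the demonstration skeletons are written as numbered lists as the instruction requests, and the parser assumes the model actually follows the `1.`, `2.`, `3.` numbering.

```python
import re

# Skeleton prompt from the text above: the instruction, two demonstrations,
# and a {question} slot for the new question.
SKELETON_TEMPLATE = """You're an organizer responsible for only giving the skeleton (not the full content) for answering the question. Provide the skeleton in a list of points (numbered 1., 2., 3., etc.) to answer the question. Instead of writing a full sentence, each skeleton point should be very short with only 3∼5 words. Generally, the skeleton should have 3∼10 points.

Question: What are the typical types of Chinese dishes?
Skeleton:
1. Dumplings.
2. Noodles.
3. Dim Sum.
4. Hot Pot.
5. Wonton.
6. Ma Po Tofu.
7. Char Siu.
8. Fried Rice.

Question: What are some practical tips for individuals to reduce their carbon emissions?
Skeleton:
1. Energy conservation.
2. Efficient transportation.
3. Home energy efficiency.
4. Reduce water consumption.
5. Sustainable diet.
6. Sustainable travel.

Now, please provide the skeleton for the following question.
{question}
Skeleton:"""


def build_skeleton_prompt(question: str) -> str:
    """Insert the user's question into the skeleton prompt."""
    return SKELETON_TEMPLATE.format(question=question)


def parse_skeleton(response: str) -> list[str]:
    """Pull the point texts out of a numbered skeleton such as '1. Dumplings.'.

    Lines that do not start with a number, a period, and whitespace are ignored.
    """
    points = []
    for line in response.splitlines():
        match = re.match(r"\s*\d+\.\s+(.*\S)", line)
        if match:
            points.append(match.group(1))
    return points


prompt = build_skeleton_prompt("How can I improve my sleep?")
reply = "1. Consistent sleep schedule.\n2. Limit evening caffeine.\n3. Dark, quiet bedroom."
print(parse_skeleton(reply))
# ['Consistent sleep schedule.', 'Limit evening caffeine.', 'Dark, quiet bedroom.']
```

The `reply` string here is a made-up model response; in practice it would come back from sending `prompt` to the LLM.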

2. Point-expanding prompt

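Next, the LLM is prompted to expand each point of the skeleton. These expansions run in parallel, which is where the latency gains described above come from. For API-only models such as OpenAI's, this means sending one separate API request per skeleton point, all at the same time, rather than a single request for the whole answer. For open-source models running locally, the point-expanding prompts can instead be processed together as one batch.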
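The fan-out step can be sketched with Python's standard `concurrent.futures` module. `call_llm` is a hypothetical placeholder that only sleeps and echoes, so the sketch runs offline; in a real pipeline its body would make one API request. Threads fit this job because each worker spends most of its time waiting on the network; with an async client, `asyncio.gather` would serve the same purpose.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for one chat-completion request.

    Sleeps to mimic network and decoding time, then echoes the prompt's last line.
    Replace the body with a real client call.
    """
    time.sleep(0.2)
    return f"(expansion of: {prompt.splitlines()[-1]})"


def expand_points_in_parallel(prompts: list[str], max_workers: int = 8) -> list[str]:
    """Send one request per skeleton point concurrently; results keep point order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() submits every prompt up front and yields results in input order.
        return list(pool.map(call_llm, prompts))


prompts = [f"Expand point {i}." for i in range(1, 6)]
start = time.perf_counter()
answers = expand_points_in_parallel(prompts)
elapsed = time.perf_counter() - start
print(answers[0])          # (expansion of: Expand point 1.)
print(f"{elapsed:.1f} s")  # should be close to 0.2 s, not the 1.0 s a sequential loop would take
```

Because `pool.map` returns results in the order of its inputs, `answers` lines up with the skeleton points, which matters when the expansions are stitched back together.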

💬 Prompt: You’re responsible for continuing the writing of one and only one point in the overall answer to the following question.

{{question}}

The skeleton of the answer is

{{skeleton}}

Continue and only continue the writing of point {{point index}}. Write it very shortly in 1∼2 sentences and do not continue with other points!
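Here is one way to fill the point-expanding prompt once per point and then join the results back into a single answer. This is a sketch under our own assumptions: the helper names are hypothetical, the template placeholder `{{point index}}` becomes `{point_index}` for Python's `str.format`, and each expansion is treated as text that continues its skeleton point.

```python
EXPAND_TEMPLATE = """You're responsible for continuing the writing of one and only one point in the overall answer to the following question.

{question}

The skeleton of the answer is

{skeleton}

Continue and only continue the writing of point {point_index}. Write it very shortly in 1∼2 sentences and do not continue with other points!"""


def build_expand_prompts(question: str, points: list[str]) -> list[str]:
    """Build one point-expanding prompt per skeleton point (indices start at 1)."""
    skeleton = "\n".join(f"{i}. {p}" for i, p in enumerate(points, start=1))
    return [
        EXPAND_TEMPLATE.format(question=question, skeleton=skeleton, point_index=i)
        for i in range(1, len(points) + 1)
    ]


def assemble_answer(points: list[str], expansions: list[str]) -> str:
    """Join each skeleton point with its expansion, keeping skeleton order."""
    return "\n".join(
        f"{i}. {point} {text.strip()}"
        for i, (point, text) in enumerate(zip(points, expansions), start=1)
    )


points = ["Energy conservation.", "Efficient transportation."]
prompts = build_expand_prompts(
    "What are some practical tips for individuals to reduce their carbon emissions?", points
)
# Made-up expansions standing in for the model's parallel replies.
expansions = ["Switch off unused lights and appliances.", "Walk, cycle, or take public transit."]
print(assemble_answer(points, expansions))
# 1. Energy conservation. Switch off unused lights and appliances.
# 2. Efficient transportation. Walk, cycle, or take public transit.
```

Every prompt carries the full skeleton, so each expansion knows what the other points cover even though it never sees their text.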

The Skeleton of Thought framework is focused mainly on reducing latency rather than on increasing quality. Combining it with other prompting methods could offer the best of both worlds. However, because each point is expanded independently, without seeing what the other expansions say, an answer assembled from parallel chunks can read less coherently than one written in a single pass.